What is Deepseek R1? To answer that, let’s start with a little history. Not very long ago, OpenAI released o1, a new large language model for ChatGPT. It can solve very complex problems that the regular ChatGPT models couldn’t, and it is said to reason at roughly the level of a PhD student. However, it is not free for most users. You need a premium ChatGPT subscription to access it, and even then your usage is limited.

Until recently, there were no free, open-source models comparable to it. That changed a few days ago, when a Chinese company called Deepseek released R1, a free, open-source model that comes close to o1 on many benchmarks. And the most interesting part is that you can install it on your own computer with very little technical knowledge. Let’s get started.

Installing on your own computer

There are many ways to run it on your computer for free, such as Ollama, Hugging Face, and PyTorch. Hugging Face and PyTorch require a working knowledge of Python. But don’t worry, you will get what you were promised: even if you cannot write a single line of Python, you are good to go. We will be using Ollama throughout this tutorial, and it doesn’t require any programming background.

Choosing the parameters

Deepseek R1 comes in several sizes, and you can download any of them:

- Deepseek R1 – 1.5b Parameters (1.1 GB Download Size)

- Deepseek R1 – 7b Parameters (4.7 GB Download Size)

- Deepseek R1 – 8b Parameters (4.9 GB Download Size)

- Deepseek R1 – 14b Parameters (9 GB Download Size)

- Deepseek R1 – 32b Parameters (20 GB Download Size)

- Deepseek R1 – 70b Parameters (43 GB Download Size)

- Deepseek R1 – 671b Parameters (404 GB Download Size)

Don’t be put off by the technical jargon if you don’t understand it. In general, the more parameters a model has, the better its responses are. However, a model with more parameters needs more computing resources and runs slower than a smaller one. With an RTX 3090, the 7b model runs very fast. Without any GPU, the 1.5b model should still run (a little slowly, but fast enough to be usable). Choose whichever one suits your hardware.
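If you would like a rough way to decide, here is a small optional Python sketch. It picks the largest variant from the list above that fits in a given amount of memory. The download sizes come from the list; the 1.5x headroom factor and the name `pick_model` are my own assumptions for illustration, not an official rule from Deepseek or Ollama, so treat the result as a starting point only.

```python
from typing import Optional

# Download sizes in GB, copied from the list above and keyed by size tag.
MODEL_SIZES_GB = {
    "1.5b": 1.1,
    "7b": 4.7,
    "8b": 4.9,
    "14b": 9.0,
    "32b": 20.0,
    "70b": 43.0,
    "671b": 404.0,
}

# Assumption: keep about 50% extra memory on top of the download size
# for the conversation context and everything else on the machine.
HEADROOM = 1.5


def pick_model(available_memory_gb: float) -> Optional[str]:
    """Return the largest size tag whose padded size fits, or None if none fits."""
    best = None
    for tag, size_gb in MODEL_SIZES_GB.items():
        fits = size_gb * HEADROOM <= available_memory_gb
        if fits and (best is None or size_gb > MODEL_SIZES_GB[best]):
            best = tag
    return best


if __name__ == "__main__":
    for memory_gb in (8, 24, 64):
        tag = pick_model(memory_gb)
        if tag is None:
            print(f"{memory_gb} GB: no variant fits")
        else:
            print(f"{memory_gb} GB: try deepseek-r1:{tag}")
```

Under these assumptions, 8 GB of memory suggests the 8b variant and 24 GB suggests 14b. If the model feels too slow, simply step down one size.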

Installing Ollama

Ollama is an easy-to-use program that lets you download various LLMs from the cloud and run them locally. Download it from the official Ollama website.

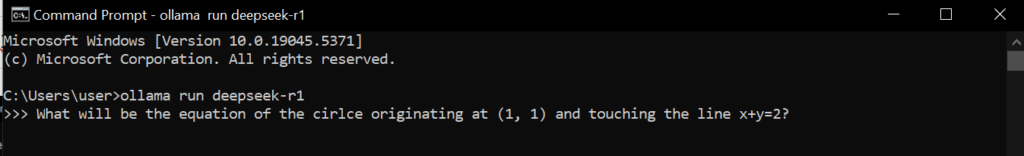

After you have installed Ollama, it should be accessible from the Command Line (or the Terminal if you are using a Unix-based system). Then run the following command (also shown in the image). Feel free to change the parameter size to suit your needs.

ollama run deepseek-r1:7b

The download will take some time, depending on the speed and stability of your internet connection. Please wait for it to finish. Once it has downloaded, you are done. It’s that simple. Let me ask it a reasoning question:

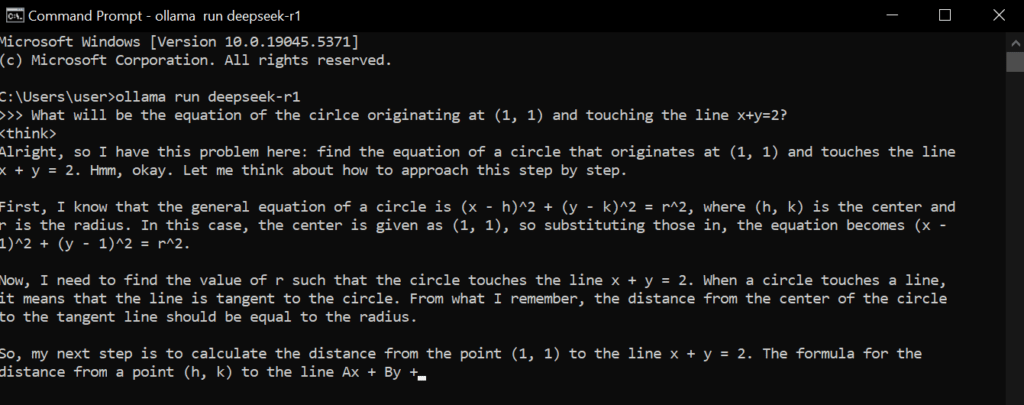

It’s thinking

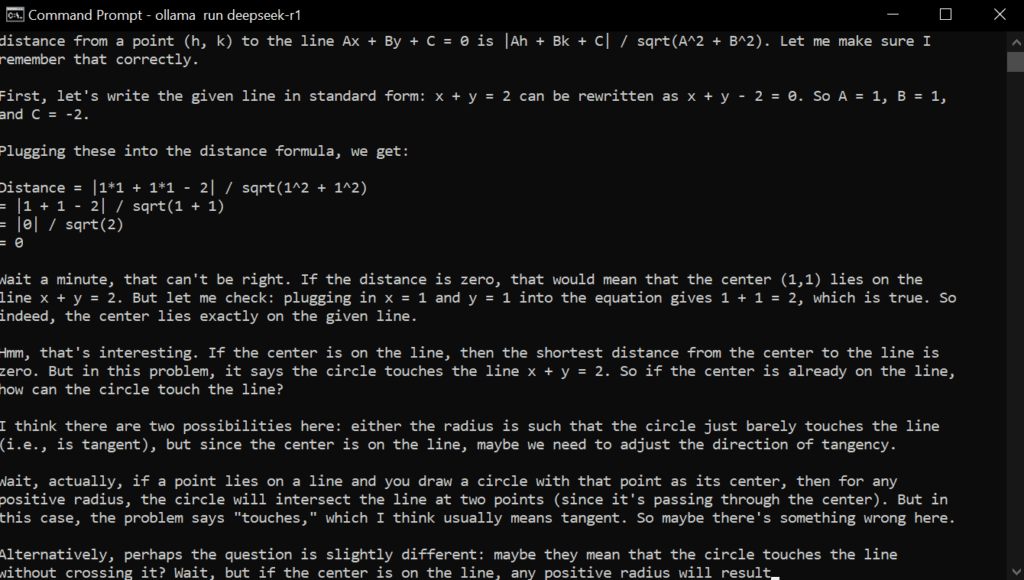

While thinking, it successfully figured out the tricky part of the question.

It’s actually doing quite well. For an open-source model that is available on GitHub and Hugging Face, the results are groundbreaking. And it works without any internet connection at all. I was so excited when I first tried it out.
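If you do know a little Python, you can also talk to the model from a script instead of the terminal. The sketch below is optional and rests on a few assumptions: Ollama is running on your machine at its default address (`http://localhost:11434`), you have already downloaded `deepseek-r1:7b`, and the model wraps its thinking in `<think>...</think>` tags. Depending on your Ollama version, the thinking part may be returned differently, so treat the tag format as an assumption. The function names are mine, chosen for illustration.

```python
import json
import urllib.request
from typing import Tuple

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "deepseek-r1:7b") -> dict:
    """Build the JSON body for one complete (non-streamed) reply."""
    return {"model": model, "prompt": prompt, "stream": False}


def ask_ollama(prompt: str, model: str = "deepseek-r1:7b") -> str:
    """Send the prompt to the local Ollama server and return the raw reply text."""
    body = json.dumps(build_request(prompt, model)).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}
    )
    # Local models can be slow, especially without a GPU, so allow a long wait.
    with urllib.request.urlopen(request, timeout=600) as response:
        return json.loads(response.read().decode("utf-8"))["response"]


def split_thinking(reply: str) -> Tuple[str, str]:
    """Split a reply into (thinking, answer).

    Assumption: the thinking is wrapped in <think>...</think> tags.
    If no complete block is found, thinking is empty and the whole reply is the answer.
    """
    before, open_tag, rest = reply.partition("<think>")
    thinking, close_tag, answer = rest.partition("</think>")
    if not open_tag or not close_tag:
        return "", reply.strip()
    return thinking.strip(), (before + answer).strip()


if __name__ == "__main__":
    # A canned reply, so this demo runs even when Ollama is not started.
    sample = "<think>\nTwo plus two is four.\n</think>\n\nThe answer is 4."
    thinking, answer = split_thinking(sample)
    print("Thinking:", thinking)
    print("Answer:", answer)
```

To query the real model, call `split_thinking(ask_ollama("your question here"))` while Ollama is running. Everything stays on your own computer, so this works offline too.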

What’s next?

You can try doing all sorts of things with AI, and it can make you much more productive. When you are out in the countryside, far from the city and without Internet access, Deepseek R1 can still help you find answers to your questions, so you can keep learning from it even then. You can also use AI for copywriting and for generating content, and there are many ways to earn from it. You can check my tutorial on earning with Kindle Direct Publishing using AI if you wish. Thanks for reading this far. I hope to come back with more tutorials very soon. Till then, bye bye.